5 best puzzle games in 2023

Some popular puzzle games include Tetris, Candy Crush, Monument Valley, The Room, and Portal. Each of these games offers unique challenges and mechanics, but all require players to use critical thinking and problem-solving skills to progress through the levels. Whether you prefer classic games or more modern titles, there are plenty of great puzzle games to choose from.

Read the instructions carefully:

Make sure you understand the rules and objectives of the game before you start playing.

Features

A language model like ChatGPT can have many features. Some examples include:

- Natural Language Processing (NLP) capabilities: ChatGPT is trained to understand and generate human language, allowing it to respond to user input in a natural and coherent way.

- Large-scale knowledge: ChatGPT is trained on a large corpus of text data, giving it access to a broad range of information and the ability to answer many kinds of questions.

- Language generation: ChatGPT can generate new text in response to user input, allowing it to carry out tasks such as writing essays, composing poetry, or generating news articles.

- Fine-tuning: ChatGPT can be fine-tuned to a specific task or domain, allowing it to perform more accurately on certain types of input or in certain contexts.

- Personalization: ChatGPT can be personalized by providing it with more data about a specific individual or group, allowing it to generate more accurate and relevant responses.

- Multi-lingual: ChatGPT can be trained on multiple languages, allowing it to understand and generate text in multiple languages.

- Dialogue systems: ChatGPT can be integrated into dialogue systems, allowing it to carry on conversations with users in a more natural and human-like way.

Take your time:

Rushing through a puzzle game can cause you to make mistakes or miss important details.

Features

There are many features that can be included in a language model, such as:

- Vocabulary size: the number of unique words or tokens that the model is able to understand and generate.

- Pre-training: the process of training the model on a large dataset before fine-tuning it for a specific task.

- Fine-tuning: the process of adjusting the model’s parameters for a specific task, such as language translation or question answering.

- Attention mechanism: a method for weighting different parts of the input when generating a response, which allows the model to focus on the most relevant information.

- Generative capabilities: the ability to generate new text that is similar to the input or training data.

- Multi-lingual: the ability to understand and generate text in multiple languages.

- Interactive capabilities: the ability to hold a conversation and respond appropriately based on the context of the conversation.

- Node-level control: the ability to fine-tune the model at the node level, to focus on specific aspects of the language or specific tasks.

- Small model size (compression): the ability to reduce the size of the model while largely maintaining its performance.
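To make the attention mechanism item in the list above more concrete, here is a minimal sketch of scaled dot-product attention for a single query, written in plain Python. The function names (`softmax`, `attend`) and the toy vectors are illustrative assumptions, not part of any particular model or library.

```python
import math

def softmax(scores):
    # Subtract the maximum score for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Return (weights, context) for one query over lists of key and value vectors."""
    d = len(query)
    # Score each key by its dot product with the query, scaled by sqrt(d).
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    # The context vector is the weighted average of the value vectors.
    context = [
        sum(w * v[i] for w, v in zip(weights, values))
        for i in range(len(values[0]))
    ]
    return weights, context

# Hypothetical toy data: the first key points in the same direction as the query.
query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]

weights, context = attend(query, keys, values)
print(weights)
print(context)
```

The weights always sum to 1, and the key most similar to the query receives the largest weight, which is what "focusing on the most relevant information" means in practice.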

Look for patterns:

Many puzzle games rely on patterns and sequences, so look for repeating elements and try to identify them.

Features

When looking for patterns in the features of a language model, some common themes include:

- Larger models tend to have more features and capabilities than smaller models.

- Pre-training and fine-tuning are often used together to improve the performance of a model for a specific task.

- Attention mechanisms and generative capabilities are often used to improve the ability of a model to understand and generate text.

- Multi-lingual models can understand and generate text in multiple languages, and may also be able to translate between them.

- Interactive models often have the ability to hold a conversation and respond appropriately based on the context of the conversation.

- Node-level control allows for fine-tuning of specific aspects of the language or specific tasks, which can improve performance.

- Small-size models are becoming more and more common as computational resources become more accessible, and they can often maintain high performance.

It’s worth noting that these are general trends, and the specific features of a language model may vary depending on its architecture and intended use case.

Try different approaches:

If one method isn’t working, try a different approach or combination of moves.

Features

There are different approaches to designing and implementing language model features, such as:

- Task-specific: This approach focuses on building a model that is tailored to a particular task or set of tasks. For example, a model designed for machine translation will have different features than a model designed for text summarization.

- Pre-train, then fine-tune: This approach involves training a large, general-purpose model on a vast amount of data and then fine-tuning it for a specific task. This approach has become increasingly popular in recent years, and has been shown to achieve state-of-the-art results on many natural language processing tasks.

- Modular: This approach involves building a modular model, where different components can be swapped in and out depending on the task or context. This allows for more flexibility and reusability, and can be useful for tasks where a specific feature is not needed.

- Hybrid: This approach involves combining different types of models and features to build a more robust and versatile model. For example, a hybrid model could use both a pre-trained transformer-based model and a recurrent neural network to improve performance on a specific task.

- Transfer learning: This approach involves taking a pre-trained model from one task and fine-tuning it for a different task. This allows for faster training times and can also improve performance.

- Light-weight models: This approach involves using smaller models that are faster to train and can be used on devices with limited computational resources. This can be achieved through techniques such as pruning, quantization, and distillation.
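Quantization, one of the compression techniques named in the list above, can be illustrated with a small sketch. The example below applies symmetric 8-bit quantization to a short list of weights in plain Python. The function names and the sample weights are hypothetical, and real toolkits use more elaborate schemes (per-channel scales, calibration data), so treat this as a sketch of the idea only.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    # Guard against an all-zero input, where any scale would do.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float weights from the integers and the scale."""
    return [v * scale for v in quantized]

# Hypothetical weights, chosen only for illustration.
weights = [0.82, -1.27, 0.003, 0.5]

q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(q)
print(max_error)
```

Each weight is stored as a small integer instead of a float, at the cost of a rounding error of at most half the scale per weight. That trade-off is why quantized models are smaller but can lose a little accuracy.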

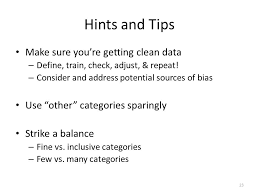

Use hints and walkthroughs sparingly:

These can be helpful when you’re stuck, but try to avoid using them too often as they can take away from the challenge and satisfaction of solving the puzzle on your own.

Features

When using hints and walkthroughs to design and implement language model features, it is important to use them sparingly and not rely on them too heavily. This is because:

- Over-reliance on hints and walkthroughs can lead to a lack of understanding and familiarity with the underlying concepts and techniques.

- Overuse of hints and walkthroughs can also lead to a lack of creativity and originality in the design and implementation of the model.

- Using hints and walkthroughs too frequently can make it difficult to troubleshoot and debug the model when things go wrong.

- Hints and walkthroughs are often specific to a certain context, and may not be relevant or applicable to other use cases.

Instead, it is recommended to use hints and walkthroughs as a starting point or guide, and then to experiment and explore on your own to gain a deeper understanding of the concepts and techniques.

It’s also important to be aware of the current state-of-the-art models and recent research in the field, and to use them as a reference.

Furthermore, it’s important to test your model on different datasets and evaluate it using different metrics to make sure it generalizes well.
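The advice about testing on several datasets with several metrics can be sketched as a small evaluation loop. Everything here is an illustrative assumption: `toy_model` is a stand-in keyword classifier, and the two datasets are made-up examples, not real benchmarks.

```python
def accuracy(y_true, y_pred):
    # Fraction of predictions that match the labels.
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def f1_score(y_true, y_pred, positive=1):
    # Count true positives, false positives, and false negatives.
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)

def evaluate(model, datasets):
    """Run the model on every dataset and report every metric."""
    report = {}
    for name, (inputs, labels) in datasets.items():
        preds = [model(x) for x in inputs]
        report[name] = {
            "accuracy": accuracy(labels, preds),
            "f1": f1_score(labels, preds),
        }
    return report

def toy_model(text):
    # Hypothetical classifier: predicts 1 whenever the text contains "good".
    return 1 if "good" in text else 0

# Made-up datasets for illustration only.
datasets = {
    "reviews": (["good game", "bad game", "good puzzle", "dull"], [1, 0, 1, 0]),
    "forum": (["not good", "great fun", "good", "boring"], [0, 1, 1, 0]),
}

report = evaluate(toy_model, datasets)
for name, metrics in report.items():
    print(name, metrics)
```

The toy model scores perfectly on the first dataset and much worse on the second, which is exactly the kind of gap that evaluating on a single dataset would hide.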